SYSTEMIZING FRANKLY'S R&D

This project is primarily focused on my research process. If you would like to see more pixels, please take a gander at my chat SDK work. For a better picture of my design thinking, head on over to my news publishing project.

OVERVIEW

Frankly is a one-stop shop for hundreds of local news stations, both large and small, each with its own unique branding and dedicated userbase. To scale our designs and the research supporting our feature build-out, we recognized the need to systemize both our process and our end product.

In doing so, we hoped to steer our overarching property designs toward higher standards while requiring as few resources as possible from our teams to keep them on the right path as we created our next-generation products. Ultimately, by better understanding our users' needs and setting the foundation for a research-backed templating system, we were able to shave the development process down from 14-16 weeks to 4 weeks.

MY ROLE

Under the Head of Design, I led the UX research and design initiatives, spearheading the systemization of these efforts. The design team and I worked side by side to generate a design system for our clients and an internal R&D system for our product features. I led and enforced user studies and client research to establish data-driven personas, use cases, analytics, and optimized templates for new and existing clients.

DELIVERABLES

We created an interactive design system, accessible from our platform's help center, to keep station designers on track as they built their websites, apps, and OTT properties. I established a similar research guide and workshop for our internal teams to maintain standards in design onboarding, conceptualizing, testing, and reporting efforts across multiple products and features, as well as Sketch libraries for our modular and interactive designs.

THE OPPORTUNITY

"HEY, LAUREN, CAN YOU MAKE SOMETHING REAL QUICK?"

The project, like most, did not arrive at my feet in a nicely packaged box with a bow. It began with the head of design asking me for optimized site designs for a large prospective client who needed to be wowed. I am not one to simply slap together something that looks nice; I wanted to make sure whatever deliverables we provided had some actual numbers behind them. In this case, the client was coming to Frankly hoping we could give them a content publishing solution that increased engagement and put more money in their pocket.

My first question was, "Who are their users?"

I was shocked to discover that even though this was one of the largest news media groups in the world, they had no idea who their readers were or what they wanted.

My second question, then, was, "Can they put us in contact with some of their users so we can conduct testing and interview sessions?"

Again, another roadblock - their legal parameters protected their users' privacy to such a degree that it would be impossible to get in direct contact with their readers through them as a resource.

With no way to reach the client's userbase directly through the client, I needed to develop a system that would identify user preferences for multiple client types through simple, systemized questionnaires that would not breach privacy standards, and then follow up with appropriate, data-driven design suggestions.

We were facing a development timeline of about 14-16 weeks from assessment to release for each new client. So much of the process was done manually that every client became a tangent in our system, and we were spending an excess of resources maintaining the custom builds we made for each individual brand.

My goal was to shave it down to 4 weeks with a better, more automated system, saving us time and a lot of resources.

ASSESSMENT

WHAT IS OUR CURRENT CLIENT ONBOARDING SYSTEM AND HOW CAN WE IMPROVE IT?

Before jumping into this project, I wanted to first visualize our flow from requirement gathering to site launch for new and existing clients. Whatever we learned would need to be implemented by others in the company, so the new system had to make their lives EASIER rather than more complicated if we wanted it to be adopted. Coworkers are users too!

I had already been working with multiple news media groups to create templates for their local stations across the US, and had been through many meetings and iteration reviews that all seemed to need the same things in different packages. I had also made it a point to interview everybody in the company who regularly interfaced with our clients, and had joined multiple onboarding and training sessions at some of the stations to get a sense of the design process from beginning to end. I began seeing patterns in needs, roadblocks, frustration points, and resource drains.

Our current process showed excessive design loops that could easily be automated by a slicker, more systemized requirement gathering period.

We had just recently started moving in a more modular direction with our design options, yet how we were presenting them to our clients was far too abstract. It may be easy to visualize for a designer, but not for a client. In addition, we needed to help guide them toward GOOD design decisions, backed by data. We needed to take more control and offer them fewer options that promised higher impact.

HIGH LEVEL GOALS

BRINGING EVERYBODY ONBOARD

To establish markers for success, I drew up a list of high-level goals to share with the team and higher-ups for approval. I wanted to make sure this effort carried real weight in our daily focus - as a lean design team, we all have a million things to accomplish. Systemizing our process needed to take precedence so we could work with greater efficiency; we couldn't put it off any longer.

Our head of design should not have to be on any of the onboarding calls. Period. It is a waste of his time and our resources.

Our design team should be focused on building a system that can reach MANY; we are not an agency.

Our Client Services team should be educated in design enough to suggest appropriate design choices made by the design team at time of onboarding, and be well versed in the supporting numbers that govern these choices.

Our research efforts should be systemized, ongoing, and should span multiple demographic areas. They should be conducted by more than one person and understood and supported by all product managers.

We should be able to understand and bucket a client and their userbase with a series of smart questions (without having to get in contact with their users), and be able to offer development ASAP.

ANALYSIS

STARTING WITH WHAT WE HAVE

I immediately dove into our analytics, identifying typical flows, major bounce instances, our highest converting pages/design elements, demographic proclivities, and ad revenue patterns. First, it became clear that the homepage boasted the highest-value ad spaces and that a good number of users regularly went directly to it, but the bounce rates were all over the place. I needed to understand why, and whether there was room for an increase in engagement based on missed opportunity. Second, the article page had become the new "homepage" for content providers, since many of their users arrive from social links, but many of those users failed to go deeper within the site, and bounce rates there were also fairly high. There was clearly some room for improvement.

For the sake of designing a research system that can be applied to multiple feature build-outs, I chose to focus on studying users who typically go directly to their local news homepage. This would bring focus to the project, as well as help us get a sense of general brand opinion and preference in connection to readership behavior.

RESEARCH

WHY DO PEOPLE READ THE NEWS?

Before jumping into pixels, I wanted to first understand the underlying motivations behind users frequenting local news sites, and see if there might be patterned indicators of their preferences and behaviors.

I recruited several users across my identified demographics, each of whom reported frequenting one or several local news sites, and conducted one-hour interview sessions with each to uncover motivations, behaviors, reading/brand preferences, accessibility limitations, and device usage. I kept the remaining 100 survey responses (answered by hopeful participants) to include in my research and to share with the team. These sessions were conducted in our San Francisco office.

I used four sites to test: our client's, the user's preferred local news site, and two typical stations with different design layouts.

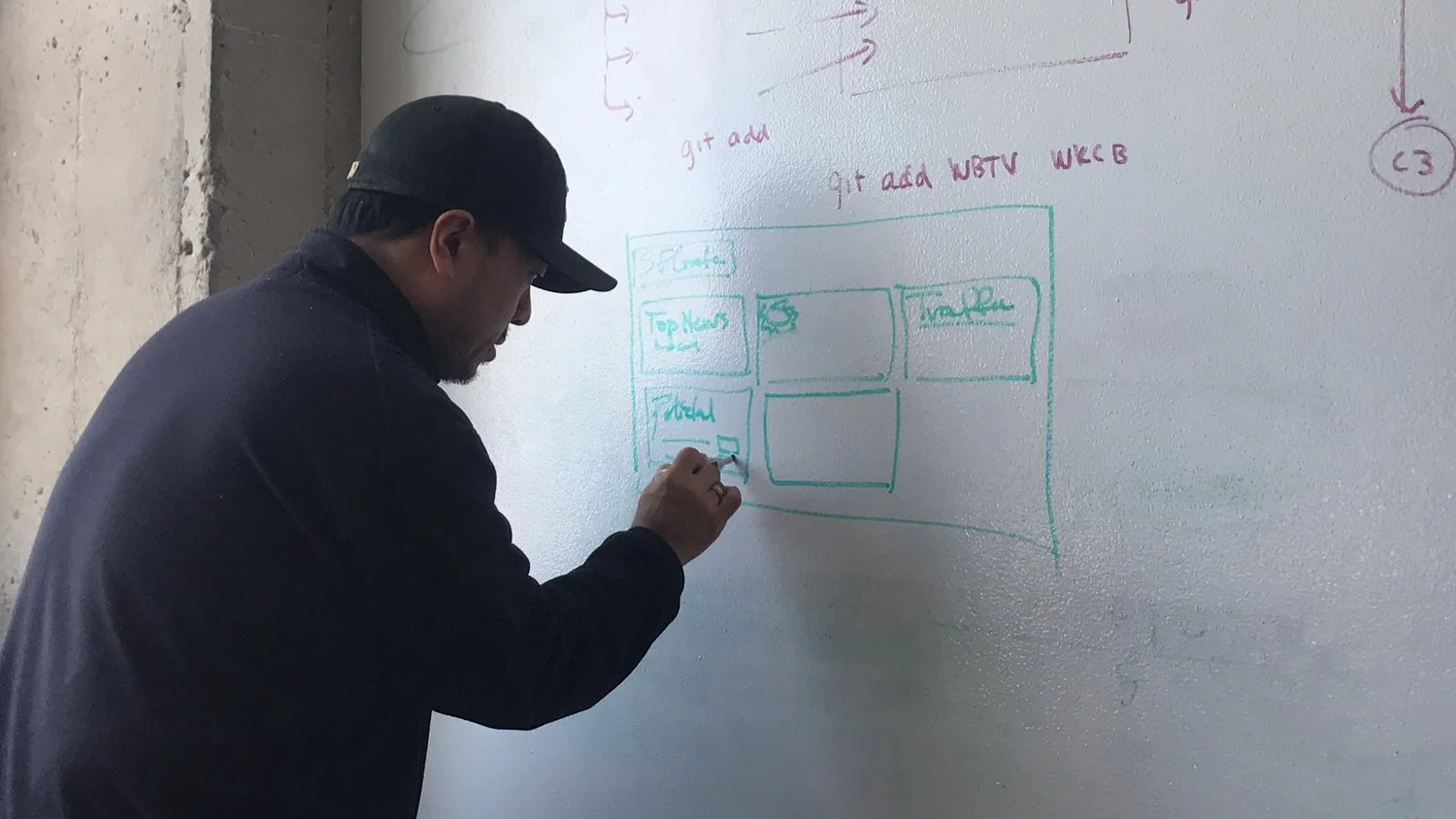

My sessions typically start with open-ended get-to-know-you questions, which let the user tell me things I might not otherwise think to ask, and I did the same here. I then followed up with a task list, a last-impression rating, and a "draw your ideal experience" exercise on the whiteboard.

A typical session looks a bit like this.

KEY FINDINGS

USERS' READING, BROWSING, & SCANNING PREFERENCES VARIED

Some users prefer to scan text while others prefer photos/video. This was typically marked by demographic and device usage.

FREQUENT VISITORS ALSO HAD THE HIGHEST REPORTED BOUNCE RATES

Frequency of getting news updates was positively correlated with the total number of news sources read and the perceived update rate of the target news site.

IMPRESSION TESTS REVEALED COMMON WORD PATTERNS

Language to describe look and feel of a given site was very telling of user values and was recognized as a reliable indicator of behaviors and reading/brand preferences. Users used words like "boring" vs "exciting" and "informative" vs "sensationalist".

ON/OFFLINE ROUTINE PLAYS A LARGE ROLE IN CONSUMER HABITS

Commuters want to see a rundown of the morning/afternoon/evening news, while avid TV news watchers expect similar patterns online.

RAPID PROTOTYPING

I don't typically dive directly into high fidelity at this stage, but we were on a time crunch and had something great up our sleeves that made it possible.

My team and I had been creating a modular design system and working diligently to make it easy for our clients to build with in our Layout Manager. We had begun building a library of modules within Sketch for easy drag-and-drop wireframing, which allowed us to maintain rules within the system. That made it possible to tackle quick high-fidelity prototyping, and super simple to make small changes across multiple frames via the master templates.

The most time-consuming portion for me was filling in the content. Thankfully, the Craft JSON API made it a breeze - I was not only able to bring in actual breaking news, but I could connect it with our own producer content to quickly present the differences in layout with live sites on our platform. Pretty cool.

FIRST ITERATION

With the new information we received from our first round of interviews and testing, I worked closely with our web team to put together a working prototype to test alongside the designs I used in the initial round of interviews. Zeplin has been an invaluable communications tool for us - it breaks down all of the elements from Sketch into HTML/CSS. Our overseas devs love it.

To reduce distraction, we connected the prototypes to area-specific news feeds from our current platform to match the participant's location.

DESIGN VALIDATION

I was faced with potential location bias: we were testing in San Francisco, while most of our clients are based in the South and on the East Coast. Could there be information I was unable to gather by narrowing my view to SF? With that in mind, I set up two more sessions, in New York and Philadelphia, this time inviting some coworkers to sit in and observe, and even participate a bit in the interviewing process, so I could get a sense of their skill and begin teaching my research methodology.

I wanted to make sure we could continue internally testing without my having to be there to lead every session, so I trained a handful of colleagues in our sister offices to cover multiple areas.

I am a big supporter of the five-user rule in usability testing. Aside from being time- and cost-efficient, it allows you to be extremely agile in your research methods. However, for it to work, you must A) select your users intelligently, B) keep a tight focus on your research objectives, and C) supplement the sessions with a larger pool of responses, such as a survey, so you can check that what you observe is strongly supported and see how widely responses deviate.
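For a rough sense of why five users can be enough, here is a minimal sketch of the problem-discovery model usually cited alongside this rule (Nielsen and Landauer). The 0.31 per-user discovery rate is the commonly quoted average for that model, not a figure measured in this project, and the function name is made up for illustration.

```python
def share_of_problems_found(n_users: int, discovery_rate: float = 0.31) -> float:
    """Expected share of usability problems found after n_users sessions.

    Model: 1 - (1 - L)^n, where L is the chance that a single user
    exposes any given problem. L = 0.31 is an assumed average, not a
    value taken from this study.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= discovery_rate <= 1.0:
        raise ValueError("discovery_rate must be between 0 and 1")
    return 1.0 - (1.0 - discovery_rate) ** n_users


if __name__ == "__main__":
    # Print the expected coverage for a few study sizes.
    for n in (1, 3, 5, 10, 15):
        print(f"{n:>2} users: {share_of_problems_found(n):.0%} of problems found")
```

Under that assumed rate, five users surface roughly 84% of the problems, with sharply diminishing returns afterward. The remainder is part of why supplementing with a survey matters.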

DISCOVERY

I WAS SHOCKED TO DISCOVER A MAJOR POLARITY IN USERS' REACTIONS TO THE NEW DESIGNS.

Some loved them, stating they were "easy to read", looked "informative", and invited a "sense of calm" which they appreciated. Other users, however, considered them "boring" - they wanted to see more movement, more color, more action shots. Something to "suck them in".

This was great news - I was beginning to see some patterns in browsing preference that could be connected to language, and potentially other indicators like age, location, and education level.

THE OUTLIER

HURRICANES SWEPT IN ANOTHER USER

In the wake of Hurricanes Harvey and Irma and the Napa fires, I had witnessed some of my dearest friends struggling to navigate (and help their loved ones navigate) one of the scariest moments of their lives.

I watched as people used Facebook to locate and help people in need. I saw an incredible spike in downloads of communication, mapping, and weather-tracking apps, as well as sign-ups for news notifications. Friends from ABC News told me they had experienced a 300% traffic increase during Hurricane Harvey - this is clearly an area that could use some attention.

Local news stations have the incredible advantage of disseminating crucial, detailed information about an area's surroundings during a time of crisis. Most of them just don't have up-to-date systems in place to do so.

I conducted 8 interviews with people who were either directly affected by the hurricanes or who had been the main resource for helping their loved ones navigate the devastation.

A participant discusses her experience in turning to her daughter for hurricane navigation. Her story was picked up by the local news station, whose Facebook post went viral. She was eventually found and rescued.

DISCOVERY

USERS' PREFERENCES CHANGE WHEN THEY GO FROM "BROWSING" TO "INFORMATION HUNTING"

This was important to me for several reasons, the most influential being the need for us to enable a station to momentarily "take over" the design and content of their site during a time of crisis or major breaking news. Our new modular approach allowed for easy drag-and-drop layout changes, and with this new insight into user preferences, we were able to validate several features and move them up to high priority. We now had a story to back up our suspicions.

Users who preferred "sensationalist" headlines, photos, and flashy interaction while they routinely browsed stories actually switched to OPPOSITE preferences (informative, straightforward, limited distraction) in times of acute information seeking.

This could also be applied to users who entered the site with the mindset of getting specific information about a topic they had already heard about, or going deeper into the site with related stories. What this meant for me was that one user type could change circumstantially. It also gave me some ideas for features that could further engage users to go deeper into the site.

PERSONAS

SO, WHO ARE WE DESIGNING FOR?

Based on motivations and design preferences across 60 million potential users, I came up with four solid personas, taking care to flesh out their motivations, typical journeys, potential points of friction, device usage, and potential design solutions for review.

At this stage, I worked closely with our engineers and PMs to get a sense of what our current feature roadmap was to make sure my solutions were within the parameters of our current abilities.

SYNTHESIS

PATTERNS REVEALED

At this point, I was now able to uncover a laundry list of best practices to keep in mind when designing these site templates, as well as begin to formulate focused questions to ask stations while trying to identify a station's users' preferences at the time of design onboarding.

I would share more, but there's only so much I can reveal about our findings. Here are a few of the big ones that made a decent impact.

PERSONAS II

NEWS STATIONS

At this time, ongoing user research was underway and it was time to do the same kind of bucketing with our current and prospective clients as I had done with the end users.

Not only are we designing a frontend experience, but we have to continuously keep in mind how stations are publishing their content and what kind/quality of content we have to display.

Fortunately for me, Frankly has an incredible Client Services team in place, many of whom have been journalists and digital producers. I took this time to sit a few of them down to get a rundown of the different "types" of local news stations that are out there, breaking them down by size, content + design, and marketing angles.

Paired with my previous on-site research, I came away with some great initial personas.

REVELATION

CONNECTING THE DOTS

I took these four station types and put them up on a whiteboard.

I then printed out a big stack of known and lesser known news sites, and started grouping them by common design patterns (text heavy, image heavy, color choices, position of content, type of content, etc.). Assuming most national stations have a powerhouse of researchers to A/B test their designs for higher engagement, I was curious to see if there was any visible correlation between station "brands" and the types of users known to frequent their sites.

When I realized there was a very clear pattern between the news station type and preferred design elements amongst user groups, I knew I was onto something.

TEMPLATES

We have been able to present early design options to clients during the onboarding process. Having gone from showing individual module features to suggested configurations (much like graduating from single LEGO pieces to full models), we have been able to cut our client conversations down tremendously.

RESULTS

Backed by research, we set the foundation for a scalable templating system, empowering the Client Services team to shave the development process down from 14-16 weeks to 4 weeks.

Not to mention, our head of design doesn't have to be on those calls anymore...not unless he wants to.

I consider that a win.